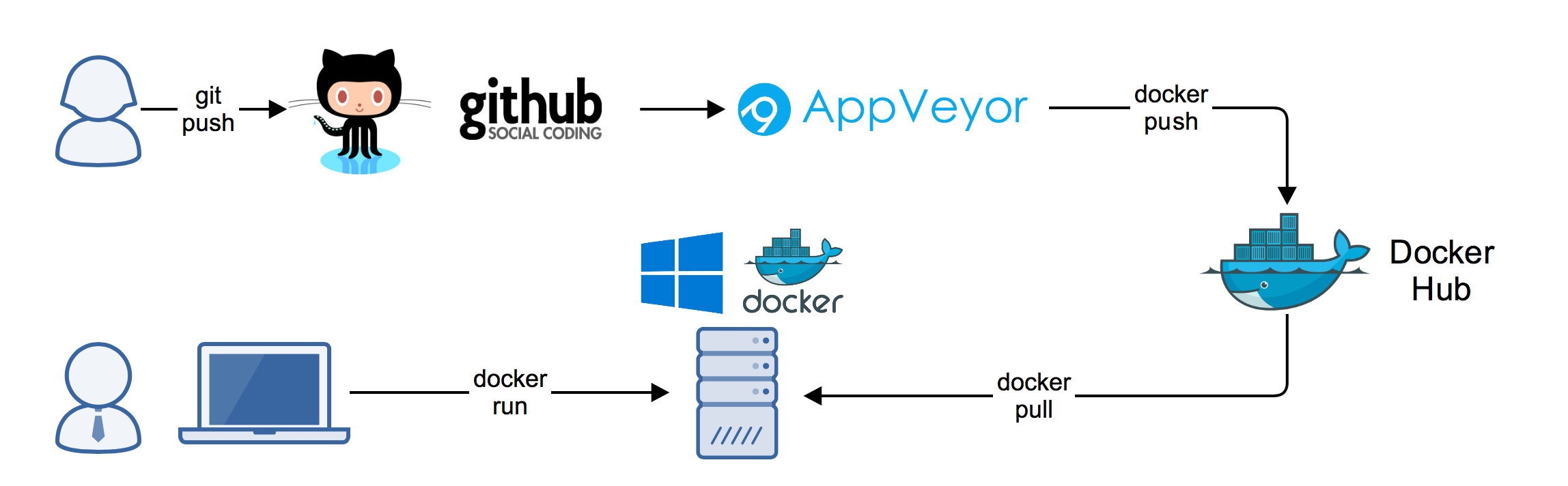

Docker is the de facto toolset for building modern applications and setting up a CI/CD pipeline – helping you build, ship and run your applications in containers on-prem and in the cloud.

Whether you're running on simple compute instances such as AWS EC2 or Azure VMs or something a little more fancy like a hosted Kubernetes service like AWS EKS or Azure AKS, Docker's toolset is your new BFF.

But what about your local development environment? Setting up local dev environments can be frustrating to say the least.

Remember the last time you joined a new development team?

You needed to configure your local machine, install development tools, pull repositories, fight through out-of-date onboarding docs and READMEs, and get everything running locally without knowing a thing about the code and its architecture. Oh, and don't forget about databases, caching layers and message queues. These are notoriously hard to set up and develop on locally.

I've never worked at a place where we didn't expect at least a week of onboarding for new developers.

So what are we to do? Well, there is no silver bullet and these things are hard to do (that's why you get paid the big bucks), but with the help of Docker and its toolset, we can make things a whole lot easier.

In Part I of this tutorial we'll walk through setting up a local development environment for a relatively complex application that uses React for its front end, Node and Express for a couple of microservices and MongoDB for our datastore. We'll use Docker to build our images and Docker Compose to make everything a whole lot easier.

If you have any questions or comments, or just want to connect, you can reach me in our Community Slack or on Twitter at @pmckee.

Let's get started.

Prerequisites

To complete this tutorial, you will need:

- Docker installed on your development machine. You can download and install Docker Desktop from the links below:

- Sign up for a Docker ID

- Git installed on your development machine.

- An IDE or text editor to use for editing files. I would recommend VSCode.

Fork the Code Repository

The first thing we want to do is download the code to our local development machine. Let's do this using the following git command:

git clone git@github.com:pmckeetx/memphis.git

Now that we have the code locally, let's take a look at the project structure. Open the code in your favorite IDE and expand the root level directories. You'll see the following file structure.

├── docker-compose.yml
├── notes-service
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
├── reading-list-service
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
├── users-service
│   ├── Dockerfile
│   ├── config
│   ├── node_modules
│   ├── nodemon.json
│   ├── package-lock.json
│   ├── package.json
│   └── server.js
└── yoda-ui
    ├── README.md
    ├── node_modules
    ├── package.json
    ├── public
    ├── src
    └── yarn.lock

The application is made up of a couple of simple microservices and a front end written in React.js. It uses MongoDB as its datastore.

Typically at this point, we would start a local version of MongoDB or start looking through the project to find out where our applications will be looking for MongoDB.

Then we would start each of our microservices independently and then finally start the UI and hope that the default configuration just works.

This can be very complicated and frustrating, especially if our microservices use different versions of Node.js and are configured differently.

So let's walk through making this process easier by dockerizing our application and putting our database into a container.

Dockerizing Applications

Docker is a great way to provide consistent development environments. It will allow us to run each of our services and UI in a container. We'll also set up things so that we can develop locally and start our dependencies with one docker command.

The first thing we want to do is dockerize each of our applications. Let's start with the microservices because they are all written in Node.js and we'll be able to use the same Dockerfile.

Create Dockerfiles

Create a Dockerfile in the notes-service directory and add the following commands.

This is a very basic Dockerfile to use with node.js. If you are not familiar with the commands, you can start with our getting started guide. Also take a look at our reference documentation.
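As a rough illustration, a basic Node.js Dockerfile for one of these services might look like the sketch below, written here as a shell heredoc so it can be pasted into a terminal from inside the service directory. The base image tag (node:lts) and the /code working directory are assumptions, not values taken from the repository; the server.js entry point comes from the file tree above.

```shell
# Run from inside the notes-service directory.
# Assumptions: the node:lts base image and the /code working directory.
cat > Dockerfile <<'EOF'
FROM node:lts
WORKDIR /code
# Copy the manifests first so the dependency layer is cached between builds.
COPY package.json package-lock.json ./
RUN npm install
# Copy the rest of the service source.
COPY . .
CMD ["node", "server.js"]
EOF
```

Because the project tree includes a local node_modules directory, a .dockerignore file listing node_modules would keep it out of the build context.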

Building Docker Images

Now that we've created our Dockerfile, let's build our image. Make sure you're still located in the notes-service directory and run the following command:

docker build -t notes-service .

Now that we have our image built, let's run it as a container and test that it's working.

docker run --rm -p 8081:8081 --name notes notes-service

Looks like we have an issue connecting to MongoDB. Two things are broken at this point. First, we didn't provide a connection string to the application. Second, we do not have MongoDB running locally.

At this point we could provide a connection string to a shared instance of our database, but we want to manage our database locally and not worry about messing up data our colleagues might be using for development.

Local Database and Containers

Instead of downloading, installing, configuring and then running the MongoDB database service, we can use the Docker Official Image for MongoDB and run it in a container.

Before we run MongoDB in a container, we want to create a couple of volumes that Docker can manage to store our persistent data and configuration. I like to use the managed volumes that docker provides instead of using bind mounts. You can read all about volumes in our documentation.

Let's create our volumes now. We'll create one for the data and one for configuration of MongoDB.

docker volume create mongodb

docker volume create mongodb_config

Now we'll create a network that our application and database will use to talk with each other. This is called a user-defined bridge network, and it gives us a nice DNS lookup service which we can use when creating our connection string.

docker network create mongodb

Now we can run MongoDB in a container and attach to the volumes and network we created above. Docker will pull the image from Hub and run it for you locally.

docker run -it --rm -d -v mongodb:/data/db -v mongodb_config:/data/configdb -p 27017:27017 --network mongodb --name mongodb mongo
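Because the database container is named mongodb and attached to the user-defined network, other containers on that network can reach it by that name. The sketch below shows how a connection string could be assembled from those pieces. The variable names DB_HOST, DB_PORT and DB_NAME are purely illustrative, and yoda_notes is the database name the notes service is pointed at later in this walkthrough.

```shell
# Illustrative variable names; the values come from the commands in this tutorial.
DB_HOST=mongodb      # container name, resolved by Docker's DNS on the user-defined network
DB_PORT=27017        # port published by the MongoDB container
DB_NAME=yoda_notes   # database used by the notes service

DATABASE_CONNECTIONSTRING="mongodb://${DB_HOST}:${DB_PORT}/${DB_NAME}"
echo "$DATABASE_CONNECTIONSTRING"
# prints mongodb://mongodb:27017/yoda_notes
```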

Okay, now that we have MongoDB running, we also need to set a couple of environment variables so our application knows what port to listen on and what connection string to use to access the database. We'll do this right in the docker run command.

docker run \
  -it --rm -d \
  --network mongodb \
  --name notes \
  -p 8081:8081 \
  -e SERVER_PORT=8081 \
  -e DATABASE_CONNECTIONSTRING=mongodb://mongodb:27017/yoda_notes \
  notes-service

Let's test that our application is connected to the database and is able to add a note.

curl --request POST \
  --url http://localhost:8081/services/m/notes \
  --header 'content-type: application/json' \
  --data '{
    "name": "this is a note",
    "text": "this is a note that I wanted to take while I was working on writing a blog post.",
    "owner": "peter"
  }'

You should receive the following JSON back from our service.

{"code":"success","payload":{"_id":"5efd0a1552cd422b59d4f994","name":"this is a note","text":"this is a note that I wanted to take while I was working on writing a blog post.","owner":"peter","createDate":"2020-07-01T22:11:33.256Z"}}
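For a quick smoke test, the response can be checked from the shell. The sketch below assumes the service returns standard JSON with double-quoted keys and uses an abbreviated sample response in place of live curl output; the response and result variable names are illustrative.

```shell
# Abbreviated sample response; in practice, capture the output of the curl command.
response='{"code":"success","payload":{"_id":"5efd0a1552cd422b59d4f994","owner":"peter"}}'

# A plain substring match is enough for a smoke test; a JSON parser such as jq
# is the better choice for anything more involved.
case "$response" in
  *'"code":"success"'*) result="note saved" ;;
  *)                    result="unexpected response" ;;
esac
echo "$result"
```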

Conclusion

Awesome! We've completed the first steps in Dockerizing our local development environment for Node.js.

In Part II of the series, we'll take a look at how we can use Docker Compose to simplify the process we just went through.

In the meantime, you can read more about networking, volumes and Dockerfile best practices with the below links:

Installation

The Docker package is in the 'Community' repository. See Alpine_Linux_package_management for how to add a repository.

Connecting to the Docker daemon through its socket requires you to add yourself to the `docker` group.

To start the Docker daemon at boot, see Alpine_Linux_Init_System.

For more information, have a look at the corresponding GitHub issue.

As of August 2016, this weakening of security is no longer necessary with Alpine 3.4.x and Docker 1.12.

Docker Compose

'docker-compose' has been in the 'Community' repository since Alpine Linux 3.10.

For older releases, do:

To install docker-compose, first install pip:

Isolate containers with a user namespace

and add in /etc/docker/daemon.json

You may also consider these options:

You will find all possible configurations here[1].

Example: How to install docker from Arch

'WARNING: No {swap,memory} limit support'

You will probably encounter this message when running docker info. To correct it, enable the kernel parameters cgroup_enable=memory swapaccount=1.

Alpine 3.8

This may already have been the case in earlier releases, but with Alpine 3.8 you must configure cgroups properly.

Warning: This does not seem to work with Alpine 3.9 and Docker 18.06. Follow the instructions for Grub or Extlinux below instead.

Grub

If you use Grub, it works as on any other Linux distribution: add the cgroup parameters to /etc/default/grub, then update your Grub configuration.
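A minimal sketch of that edit, run against a scratch file rather than the real /etc/default/grub. It assumes the kernel parameters live in GRUB_CMDLINE_LINUX_DEFAULT, which is common but worth checking on your system; the sample file contents are made up for illustration.

```shell
# Work on a scratch copy so nothing on the real system is modified.
grub_file=$(mktemp)
printf 'GRUB_TIMEOUT=5\nGRUB_CMDLINE_LINUX_DEFAULT="quiet"\n' > "$grub_file"

# Append the cgroup parameters inside the existing quoted value.
sed 's/^\(GRUB_CMDLINE_LINUX_DEFAULT="[^"]*\)"/\1 cgroup_enable=memory swapaccount=1"/' \
  "$grub_file" > "$grub_file.new"

cat "$grub_file.new"
```

On a real system the same substitution would be applied to /etc/default/grub as root, typically followed by regenerating the configuration (for example with grub-mkconfig -o /boot/grub/grub.cfg) and a reboot.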

Extlinux

With Extlinux, you add the same cgroup parameters, but in /etc/update-extlinux.conf,

then update the config and reboot:

update-extlinux

How to use docker

The best documentation for how to use Docker and create containers is at the main Docker site. Adding anything more to it here would be redundant.

If you create an account at docker.com, you can browse through other users' images and learn from the syntax in contributors' Dockerfiles.

Official Docker image files are denoted by a blue ribbon on the website.